LCDs And Pico Memory Management

It may not be quite true to say modern computers have unlimited processing power and memory, but the high performance and huge storage space I’ve gotten used to do make things a lot easier.

Yet one of the fun things about programming for the Pico is in working within tight constraints. The Pico is advertised as “2MB of Flash memory”, which isn’t much at all by modern standards. And for many purposes that figure doesn’t really represent usable memory, as “Flash memory” is where the firmware sits, and hence isn’t readily available for programmers to use.

The important figure for the Pico is 256kb “on-chip SRAM”, which is effectively the space available both for storing program code and for running it. The latter is important and very different to developing on most devices (or most devices that I’ve worked on) where processor memory and storage memory are generally separate.

The limitation hit me hard with the next little project I started on: trying to display live tube arrivals on a tiny LCD screen.

Displaying Live Tube Arrivals

I ran into a few memory issues making the e-ink weather display and had to go through my code and eliminate some pretty lazy mistakes I’d made whilst learning the platform.

But that’s nothing compared to what I’ve had to learn on the most recent mini-project which, on the face of it, seemed very simple: to call the TFL (Transport for London) API and display the next few Central Line departures from our local Tube station.

I’d bought a Waveshare 1.3 inch LCD display with something else in mind, but I wanted to do something a little less ambitious first and “query an API and display some numbers” seemed simple enough. Au contraire, as I was to learn.

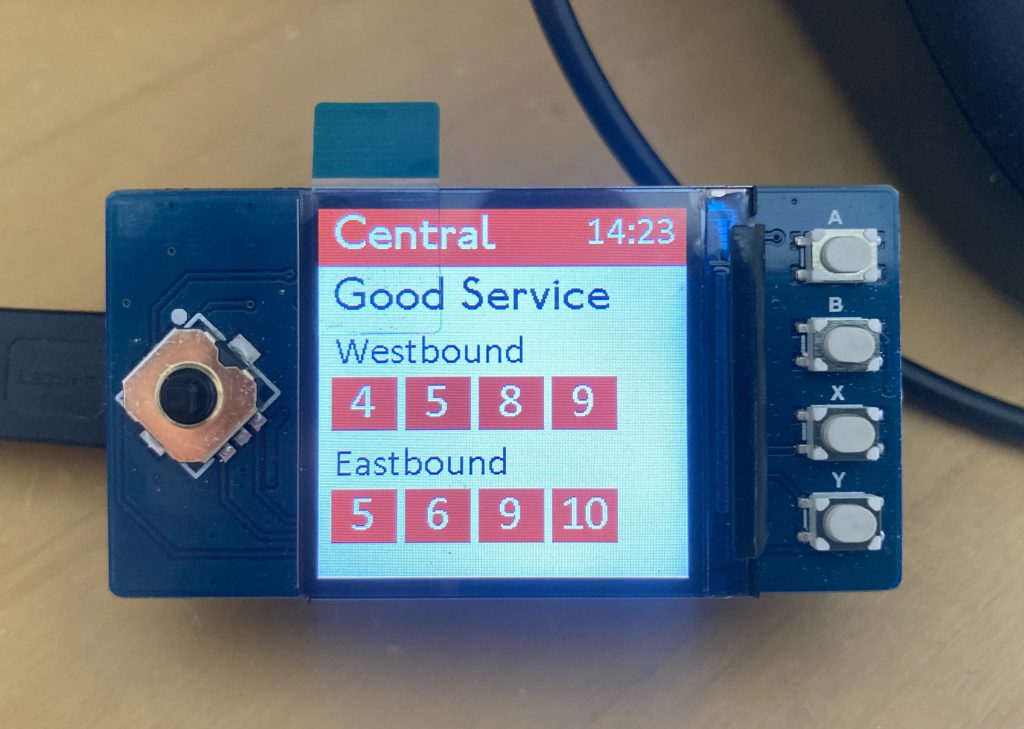

Before I go into the problems, this is what the result looks like:

The buttons and the little toggle do nothing at the moment; the whole app is just a display that refreshes every 60 seconds.

In terms of how it works, it’s three or four main steps, depending on how you look at it:

- Initialise Wifi, set datetime etc

- Make an API call to retrieve Central Line status. (“Good Service” as shown above.)

- Make an API call to retrieve arrivals at a single (hardcoded) Tube station

- Draw to the screen

Did it work? Did it heck.

Refactoring For Memory Management

The problems came due to the requirements of the Waveshare LCD.

It has a display size of 240×240 pixels and 65k colours, which means that each pixel on the screen requires two bytes of memory. Remember that with the Pico there’s no such thing as separate graphics storage so all of this has to be done within the 256kb of on-chip RAM. A 240×240 display has 57,600 pixels, and if each pixel requires 2 bytes then 115,200 bytes of our available memory has to be allocated to screen display.

More precisely: 115,200 bytes of memory needs to be available at the time the screen buffer is created. This is just under half the available memory, but then of the 256kb RAM in the Pico, actually only about 200kb (or a little under) ever seems to be usable.

Therefore close to 60% of available memory needs to be free just to draw some text and rectangles to a tiny 1.3 inch LCD.
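The arithmetic above can be checked with a few lines of Python. This is only a sketch: the function name is made up for illustration, and the 200,000-byte "usable" figure is the rough estimate from the text rather than a measured value.

```python
def framebuffer_bytes(width, height, bits_per_pixel=16):
    """Bytes needed to hold one full frame in RAM."""
    return width * height * bits_per_pixel // 8


# Assumption: roughly 200,000 bytes of heap are usable in practice.
USABLE_BYTES = 200000

size = framebuffer_bytes(240, 240)       # 65k colours = 16 bits per pixel
share = 100 * size / USABLE_BYTES        # percentage of the usable heap

print(size, "bytes for the screen buffer")
print(round(share, 1), "% of usable memory")
```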

Basically, as soon as I tried to run the first end-to-end version of my code, I ran into all kinds of memory errors. Or in particular, I got network errors in making the API call, and after some digging it turned out that memory errors in the urequests module often manifest as network errors in the output, or things like:

    OSError: (-29312, 'MBEDTLS_ERR_SSL_CONN_EOF')

That does not look like a memory error to me, but lack of memory was the cause.

The reason for this was that memory for the screen was being initialised early in the code, as a byte array:

    super().__init__(bytearray(height * width * 2), width, height, framebuf.RGB565)

Straight away this allocates 115,200 bytes of memory that will be used for the screen, which unfortunately left very little for the API calls that followed.

I went down some semi-productive rabbit-holes that resulted in me freezing a lot of my helper modules into the Pico’s firmware. It wasn’t as hard as I thought it would be, once I stopped trying to build the firmware on Windows, and it meant that a few tens of kilobytes of program code were now going to be run from inside the Pico’s secret 2MB Flash memory and not take up valuable on-board memory space.

It still didn’t work, of course. Code never does.

The next thing was to move things around in the code. This got the API calls working, but then screen initialisation failed.

I added some debugging code using the built-in Garbage Collector, which every Pico programmer must be familiar with, and cleared some run-time memory by running inline garbage collection gc.collect() calls. Coupled with a bit of code refactoring to help the garbage collector do its job, and switching https calls to the API to http (yes, I know, but it worked), I freed up quite a bit of memory.
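A minimal sketch of the kind of debug helper I mean. The name report_free is hypothetical; gc.mem_free() is a MicroPython addition to the gc module, so the lookup is guarded to let the same code run on desktop Python, where it simply reports None.

```python
import gc


def report_free(label):
    """Force a collection, then print and return the free heap in bytes.

    Returns None when gc.mem_free() is unavailable (e.g. desktop Python).
    """
    gc.collect()
    mem_free = getattr(gc, "mem_free", None)  # MicroPython-only function
    free = mem_free() if mem_free else None
    print(label, "free bytes:", free)
    return free


report_free("before screen init")
```

Calling it at each stage (after Wifi setup, after each API call, just before creating the screen buffer) shows where the memory goes.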

Yet it still failed, despite my debug output showing a whopping(!) 178,464 bytes of free memory right before I try to initialise the screen – which in theory only needs 2/3rds of that.

I needed 115,200 bytes, and I had 178,464 to spare, so why wasn’t it working?

Going Mad With Memory Fragmentation

I had to go further down the rabbit hole and learn about memory fragmentation. Adding a few more debug lines made it certain that fragmentation was the problem.

micropython.mem_info() is the line of code to add and, indeed, it revealed that although I had plenty of free memory, it was “scattered” all over the 256kb available.
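A small sketch of how that call can be wrapped. The helper name dump_heap is hypothetical, and the import is guarded so the snippet does nothing harmful off-device. Passing an argument to mem_info() asks for the more verbose output, which on MicroPython includes a map of the heap that makes the scattered free blocks visible.

```python
try:
    import micropython  # only present on MicroPython builds
except ImportError:
    micropython = None


def dump_heap(verbose=True):
    """Print heap statistics; return False if not running on MicroPython."""
    if micropython is None:
        return False
    if verbose:
        micropython.mem_info(1)  # extra detail, including the heap layout
    else:
        micropython.mem_info()   # summary figures only
    return True


dump_heap()
```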

Memory fragmentation is not something many programmers, especially lazy web programmers like me, have needed to deal with for decades. So what is it?

The best analogy I can think of is to consider the Pico’s memory like a bookshelf. Every time Micropython needs some memory to store something, whether an object, a string, or something big like the JSON results from an API call, it looks for the first available space on the shelf in which it can fit the object. (I’m assuming it’s the first space, and if that’s not technically true it still seems a viable mental model.)

Slowly the shelf fills up, adding objects big or small from left to right as they arrive, and if you keep adding things eventually you’ll run out of shelf space. That’s what had happened in the first un-optimised version of my code.

Sometimes shelf space gets cleared when an object is no longer needed. Micropython will try to manage this space itself through automatic garbage collection. When memory usage reaches a certain threshold it will look through the shelf and throw away anything that it’s sure it doesn’t need. Things like strings that were declared inside a method call and then never used again, or the raw results from an API call that have been processed.

Running gc.collect() forces this garbage collection process to run, meaning that even if Micropython doesn’t think it’s running low on memory you can force it to sort its bookshelf out. You’ll likely do this because you know you’re about to ask it to store something big and you want to free the maximum amount of space.

The problem is that it only really does half a job of sorting its bookshelf out, because although it will clear out unwanted items it leaves gaps behind in the process.

Let’s say there are three books on the shelf and you ask the garbage collector to decide which ones to keep. The book on the left is the complete works of Dickens in a single volume and nobody’s ever going to read it, so Micropython throws it out (or takes it to the charity shop if the idea of throwing a book away quite rightly offends you). Eight inches of shelf space have been freed for future use!

The book to the right of it might be a little paperback, but it’s very popular so it will have to stay there.

To the right of that are the complete works of Shakespeare, again in a single volume. I’m not sure many people read those things outside of school, so out it goes! Another ten inches of shelf space cleared!

So by clearing out eight inch and ten inch tomes we’ve now got eighteen inches of shelf space free. That should be enough for all of Game of Thrones (if for some reason they were published in a single volume needing a whopping twelve inches of free space).

Easy, right? But not so fast: we don’t have a twelve inch space where it can fit. We have eighteen inches of space, which is more than enough, but that little paperback is sitting right in the middle of it. Wouldn’t it be ideal if Micropython could move that paperback to the left, or to the right, so it could fit Game of Thrones in? It would be great if it did, but unfortunately it won’t.

The problem is that by adding a popular paperback in exactly the wrong place we’ve introduced fragmentation, and as far as I can tell when you have it you can’t get rid of it. (Unless you can work out how to throw the paperback away without upsetting anyone.)
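The bookshelf can be turned into a toy first-fit allocator. This is a sketch of the mental model only, not of how Micropython's heap is actually implemented, and the one-inch paperback and nineteen-inch shelf are assumptions chosen to make the numbers line up.

```python
class Shelf:
    """Toy first-fit allocator: a shelf of fixed width, measured in inches."""

    def __init__(self, width):
        self.width = width
        self.books = {}  # title -> (start position, size)

    def _gaps(self):
        """Yield (start, size) for each free run, left to right."""
        position = 0
        for start, size in sorted(self.books.values()):
            if start > position:
                yield position, start - position
            position = start + size
        if position < self.width:
            yield position, self.width - position

    def add(self, title, size):
        """Place the book in the first gap that fits; False if none does."""
        for start, gap in self._gaps():
            if gap >= size:
                self.books[title] = (start, size)
                return True
        return False

    def remove(self, title):
        del self.books[title]

    def free_total(self):
        return sum(gap for _, gap in self._gaps())

    def largest_gap(self):
        return max((gap for _, gap in self._gaps()), default=0)


shelf = Shelf(19)
shelf.add("Dickens", 8)
shelf.add("Paperback", 1)
shelf.add("Shakespeare", 10)

# "Garbage collect" the two big volumes; the paperback stays put.
shelf.remove("Dickens")
shelf.remove("Shakespeare")

print("free:", shelf.free_total(), "largest gap:", shelf.largest_gap())
print("Game of Thrones fits:", shelf.add("Game of Thrones", 12))
```

Total free space is what a simple free-memory figure reports, but the largest gap is what decides whether a big allocation succeeds.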

In terms of code, what I’d written did the following:

- Allocate space for the first API call

- Garbage collect, which took us almost back to as good a state as the start

- Allocate space for the second API call

- Process the API call and return the results

- Garbage collect again. What this did was remove the API call data but the space just after it was now taken up by the results of processing that data. This was my small, popular paperback

- Try to allocate a large contiguous space for the display. Just like trying to fit Game of Thrones on the shelf, I had plenty of space, but it wouldn’t fit in the space before the API results, and it wouldn’t fit in the space after the API results either. I had plenty of space, but I had something blocking right in the middle
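The sequence above, reduced to a sketch. Everything here is hypothetical: the function names, the fetch callable standing in for the API request, and the "destination" and "seconds" field names are placeholders rather than the real TFL response format. On a desktop the final allocation always succeeds; on the Pico it is the line where the failure appeared.

```python
import gc
import json

WIDTH, HEIGHT = 240, 240


def extract_arrivals(raw_json, limit=3):
    """Keep only the fields needed for display.

    The parsed payload is local to this function, so it becomes
    garbage as soon as the function returns.
    """
    data = json.loads(raw_json)
    return [(item["destination"], item["seconds"]) for item in data[:limit]]


def run_once(fetch):
    raw = fetch()                     # large, short-lived API response
    arrivals = extract_arrivals(raw)  # small, long-lived result ("the paperback")
    raw = None                        # drop the last reference to the payload
    gc.collect()                      # frees the payload, leaves the result in place
    screen = bytearray(WIDTH * HEIGHT * 2)  # needs one contiguous block
    return arrivals, screen


def fake_fetch():
    """Stand-in for the real HTTP request."""
    return '[{"destination": "Ealing Broadway", "seconds": 120}]'


arrivals, screen = run_once(fake_fetch)
print(arrivals, len(screen))
```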

What To Do About Fragmentation

If I had a gripe about the Pico/Micropython, it’s that it requires rather tight memory management without offering the tools to do it.

In an ideal world, I would be able to specify where objects are stored at the time that they’re created. Imagine if I could specify to stack small books from the right hand of the shelf and large books that are often recycled from the left. I’d be much less likely to be short of space due to a small book occupying a large empty space in the middle of the shelf. Maybe I could do that if I got into the firmware myself, but I’m not that clever.

The Micropython docs encourage allocating space for large items up-front, before fragmentation can occur, for precisely that reason. (The docs also contain plenty of other useful tips.) In this scenario my screen’s bytearray would be allocated as part of initialisation, as I first had it in my code. The problem is that the API calls then don’t have enough space to run, because I’ve effectively taken 115,200 bytes of memory away from the Pico permanently. My objection to declaring the bytearray first is that I do have enough memory to achieve what I want, just not if I try to allocate it all at the same time.

Managing fragmentation requires quite fine-tuning of the code. In my case, I created dictionary objects and empty strings before I made the second API call, effectively forcing the paperback to be put on the shelf before either Dickens or Shakespeare took up all the space.

The problem with this approach is that memory is allocated in all kinds of places that can be hard to spot, such as in string concatenation. If, for instance, I want to build a custom dictionary as the result of processing an API call, then I create strings every time I create a dictionary key. These could potentially fragment memory.

I wish I could force Micropython to move everything along the shelf and consolidate free space, or that I could tell it exactly where to put things as it needs to stack them, but I can’t. So as it stands, if I need a big chunk of memory for something I have to be very careful about how I use that memory in the run-up to needing it.

What Did I Learn?

I learnt quite a few specific things:

- The order in which things are declared in code can matter as much as their size

- HTTP urequest calls seem less demanding on the system than HTTPS

- Running gc.collect() after a urequest call is more-or-less mandatory in order to reclaim memory in the most efficient way possible, even if the results of the call are still available. This is because it seems garbage can be created inside Micropython modules (which seems obvious when you think about it but wasn’t to me)

- Putting things in small, discrete functions, so that variables have very limited scope, is not only good practice overall but makes garbage collection much easier

What Next?

As I said above, I hadn’t intended this display to be used for this purpose, but I wanted a quick win. It turned out to be anything but quick, but then that seems to be the way with microcontrollers.

What I’ve learned has changed my opinion about what to do with it next. To be useful this really needs a bigger display, such as this 2 inch LCD by Waveshare. The problem with this is that at 320×240 pixels it requires 33% more memory, or a whopping 153,600 bytes. That’s over 75% of all the memory the Pico has available, and I feel that the 115,200 bytes I’m already allocating is taking me close enough to the limit anyway. Although I’ve tried allocating the 153,600 bytes at the same stage in the code and it seems to work… But still: it might be fragile.

Knowing that, the 1.8 inch display looks a better prospect. It’s just about big enough as a screen, and only .2 of an inch less than the bigger display, although it would be nice to have some buttons built-in like the 2 inch display does. But at a 160×128 resolution which, on the face of it, seems like a major step down, it only needs 40,960 bytes of memory for the screen display, which is less than half the 1.3 inch screen. Maybe less really is more.
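For comparison, here are the three displays side by side. As before this is a sketch: it assumes two bytes per pixel for all three screens and uses the rough 200,000-byte usable-memory estimate from earlier.

```python
# Assumption: roughly 200,000 bytes of heap are usable in practice.
USABLE_BYTES = 200000

# Resolutions as quoted in the text: (width, height) in pixels.
DISPLAYS = {
    "1.3 inch": (240, 240),
    "2 inch": (320, 240),
    "1.8 inch": (160, 128),
}


def buffer_bytes(width, height):
    """Full-screen buffer size at two bytes per pixel."""
    return width * height * 2


for name, (width, height) in DISPLAYS.items():
    size = buffer_bytes(width, height)
    percent = round(100 * size / USABLE_BYTES)
    print(name, "needs", size, "bytes, about", percent, "% of usable memory")
```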

That adds a fifth item to “What did I learn?”: that displaying anything on a colour screen with a Pico is a really big deal.